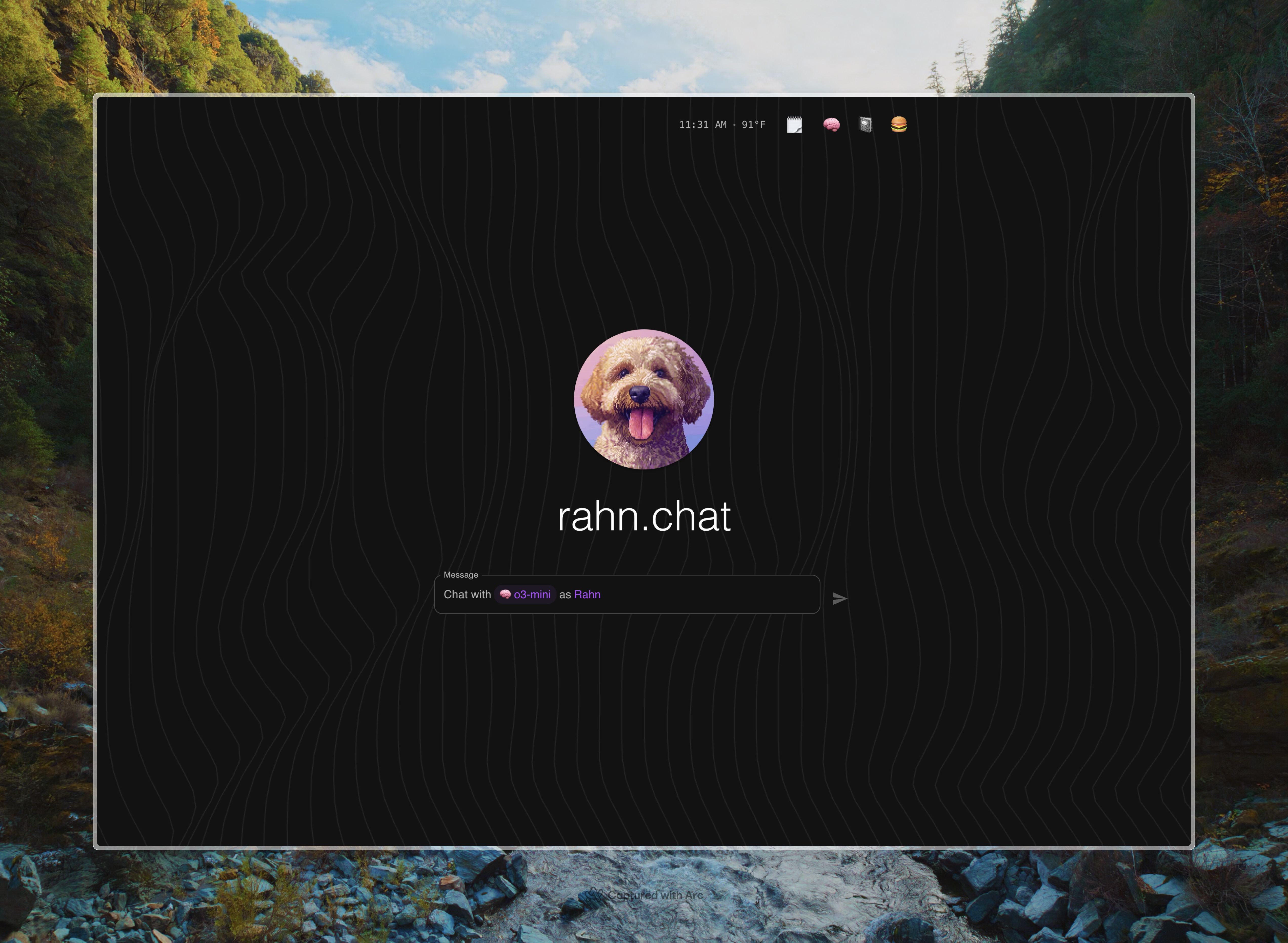

rahn.chat - Privacy-First AI Chat Platform

Building Rahn.chat: A Weekend Experiment in AI-Powered Development

What started as a Saturday morning coffee and tech news session turned into a 36-hour build sprint that produced rahn.chat, one of my most comprehensive side projects to date (at least among the ones other people can actually use 😅). After seeing yet another venture-backed AI chat wrapper launch with considerable fanfare, I couldn't help but think: "How hard could this really be?"

Turns out, not that hard when you have the right AI tools at your disposal.

The Build: From Concept to Deployment in 36 Hours

Armed with OpenAI's o1 for architectural planning, Cursor.sh with Claude Sonnet for development, and Replit for deployment, I set out to build something comparable to what teams of engineers were shipping. The result? A fully featured AI chat interface that has been running stably for over 6 months and counting.

Rahn.chat isn't just another wrapper – it's proof of how AI is fundamentally changing who can build software and how quickly they can do it. Named after my dog Rahn (who serves as the welcoming default character), the app showcases what's possible when you combine determination with AI-powered development tools.

Key Features and Technical Innovations

The platform supports multiple AI models including OpenAI's GPT-4o family, Anthropic's Claude models, Google's Gemini, and yes, even DeepSeek (integrated on day one of its release, before certain organizational policies were in place, which is a testament to the agility of independent development).
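Supporting several providers means every request has to reach the right API, and the providers do not all share one request format. A minimal sketch of one way to route requests, assuming the app dispatches on the model ID prefix (an assumption; the post doesn't describe how rahn.chat actually routes), with `Provider`, `PREFIXES`, and `providerFor` as hypothetical names:

```typescript
// Providers named in the post. Routing on the model ID prefix is an
// illustrative assumption, not rahn.chat's documented design.
type Provider = "openai" | "anthropic" | "google" | "deepseek";

// Hypothetical routing table: first matching prefix wins.
const PREFIXES: [string, Provider][] = [
  ["gpt-", "openai"],
  ["o1", "openai"],
  ["claude-", "anthropic"],
  ["gemini-", "google"],
  ["deepseek-", "deepseek"],
];

// Resolve which provider adapter should handle a given model ID.
function providerFor(modelId: string): Provider {
  const match = PREFIXES.find(([prefix]) => modelId.startsWith(prefix));
  if (!match) {
    throw new Error(`Unknown model: ${modelId}`);
  }
  return match[1];
}
```

Each `Provider` value would then select a per-provider adapter that formats the request and parses the response. Keeping the table as data means adding a newly released model family is a one-line change.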

What sets rahn.chat apart:

- Custom Instructions & Characters: Users can create personalized AI assistants with custom instructions and DALL-E generated avatars

- Hybrid Reasoning Models: Experimental combinations like HaikuSeek and DeepSonnet that blend different AI models for enhanced performance

- Privacy-First Architecture: All data (API keys, conversations, custom instructions) stored locally on the client device – the server maintains zero user data

- Progressive Web App (PWA) Support: Full mobile functionality that many competitors still lack

- Rolling Context Windows: Sophisticated token management across different model providers

- Voice Integration: Text-to-speech capabilities using both browser APIs and Hume.ai for custom voices
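Custom instructions and rolling context windows fit together naturally: a character's instructions stay pinned as the system message while older turns roll out of the window. The sketch below assumes a simple message shape and a rough four-characters-per-token estimate; it is not rahn.chat's actual code, a real implementation would use each provider's tokenizer and context limit, and `ChatMessage`, `estimateTokens`, and `buildRollingContext` are hypothetical names.

```typescript
// Hypothetical message shape; field names are illustrative only.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Rough token estimate (~4 characters per token for English text).
// This is an assumption; real counts depend on the provider's tokenizer.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Pin the custom instructions as the system message, then walk backward
// from the newest message, keeping turns until the token budget runs out.
function buildRollingContext(
  instructions: string,
  history: ChatMessage[],
  maxTokens: number,
): ChatMessage[] {
  const system: ChatMessage = { role: "system", content: instructions };
  let budget = maxTokens - estimateTokens(instructions);
  const kept: ChatMessage[] = [];

  for (let i = history.length - 1; i >= 0; i--) {
    const cost = estimateTokens(history[i].content);
    if (cost > budget) break; // this turn and everything older rolls out
    kept.push(history[i]);
    budget -= cost;
  }

  // kept is newest-first; restore chronological order after the system message.
  return [system, ...kept.reverse()];
}
```

Calling `buildRollingContext(instructions, history, budget)` with a per-model budget returns the system message followed by the newest turns that fit, in chronological order. Walking backward from the newest message means the most recent exchange is always the last thing to be dropped.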

Lessons Learned and Strategic Insights

This project taught me several valuable lessons about AI development:

1. Speed to Market is Everything: What took me 36 hours to build might have taken a traditional team weeks or months. AI development tools are the great equalizer.

2. The Platform Risk: When you build on top of AI APIs, you're always one feature release away from obsolescence. The major platforms can and will absorb successful patterns.

3. Technical Debt vs. Innovation Velocity: While rahn.chat could use updates (it's still running older model versions), the real value was in exploring what's possible, not slowing down to maintain another production service.

4. Local-First Architecture Works: By storing everything client-side, I avoided the complexity and cost of user data management while maintaining full functionality.
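As a rough illustration of the local-first approach, this sketch keeps all user state (API keys, custom instructions, conversations) in a single JSON entry behind the Web Storage `getItem`/`setItem`/`removeItem` methods. It assumes `localStorage` as the backing store, although the post doesn't say which browser storage rahn.chat actually uses; `KeyValueStore`, `MemoryStore`, `LocalState`, `STORAGE_KEY`, `loadState`, `saveState`, and `clearState` are hypothetical names. The in-memory `MemoryStore` stands in for `localStorage` so the code also runs outside a browser.

```typescript
// Subset of the Web Storage API; window.localStorage satisfies it in a browser.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// In-memory stand-in so the sketch runs outside a browser (e.g. in tests).
class MemoryStore implements KeyValueStore {
  private data = new Map<string, string>();
  getItem(key: string): string | null {
    return this.data.get(key) ?? null;
  }
  setItem(key: string, value: string): void {
    this.data.set(key, value);
  }
  removeItem(key: string): void {
    this.data.delete(key);
  }
}

// Hypothetical shape of everything the client keeps; none of it goes to a server.
interface LocalState {
  apiKeys: Record<string, string>; // provider name -> the user's own API key
  instructions: Record<string, string>; // character name -> custom instructions
  conversations: { id: string; messages: { role: string; content: string }[] }[];
}

const STORAGE_KEY = "rahn-chat-state"; // hypothetical key name

function emptyState(): LocalState {
  return { apiKeys: {}, instructions: {}, conversations: [] };
}

// Read state from the device, falling back to an empty state.
function loadState(store: KeyValueStore): LocalState {
  const raw = store.getItem(STORAGE_KEY);
  if (raw === null) return emptyState();
  try {
    return { ...emptyState(), ...(JSON.parse(raw) as Partial<LocalState>) };
  } catch {
    return emptyState(); // corrupted entry: start fresh rather than crash
  }
}

function saveState(store: KeyValueStore, state: LocalState): void {
  store.setItem(STORAGE_KEY, JSON.stringify(state));
}

// "Delete my data" is purely a local operation; there is no server copy.
function clearState(store: KeyValueStore): void {
  store.removeItem(STORAGE_KEY);
}
```

In the browser, `loadState(window.localStorage)` would work as-is, since `Storage` provides those three methods. Storing one blob keeps the sketch short; a larger app might split conversations into separate keys or move to IndexedDB as history grows.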

While rahn.chat remains in maintenance mode (still faithfully chugging along after 6+ months), the lessons learned have informed dozens of other experiments and prototypes. Each build gets faster and more sophisticated.

Check out rahn.chat if you're curious – Rahn (the dog) is still there to greet you and show you around. Just don't blame me if you end up building your own AI project afterward.